Implementing a Locked-Down Generative AI Model to Establish Validity, Reliability, and Fairness in Certification of Persons

Artificial Intelligence (AI) is increasingly being used to perform several certification processes, including scheme development, application management, examination development, and invigilation. For an AI model to demonstrate compliance with ISO/IEC 17024, it must be validated and meet established quality controls.

A key feature of an AI system is its capacity to learn from training data and improve on specified tasks, as measured by performance metrics. However, when AI is used for certification processes, it may be important to implement a "locked-down" model. "AI lockdown" is not a standard term in AI, and its meaning depends on the context.

- Security Context: In cybersecurity, lockdown means preventing unauthorized access and threats.

- Controlled Usage: In this context, a locked-down model is a version of the AI that is restricted in its capabilities and runs in a sandbox environment that limits its access to external data.

- Ethical and Regulatory Framework: Protocols established by a regulatory body that outline how AI models should be developed and used to ensure compliance and ethical use.

When a certification body for persons uses generative AI, it should consider all three aspects of “AI lockdown” to ensure that the model meets the requirements for security, controlled usage, and regulatory compliance. This article focuses on AI lockdown in the context of controlled usage and compliance with protocols established by regulatory bodies. Nevertheless, for an AI model to be accepted for certification of persons, it must also be locked down to prevent unauthorized access and threats. The term “model” is used here to mean an algorithm that has been trained to complete a specific task.

For an AI model to be valid, reliable, and fair, the following requirements need to be addressed:

- The AI model must demonstrate that it is valid and fit for purpose. Validity in AI generally refers to the model’s ability to perform according to established metrics. Established validation methods include peer review and benchmarking. Validation can be done through a study that compares the model’s performance against established metrics. For example, one could compare the results of a cut score study performed by an AI model with those of a cut score study performed by subject matter experts (SMEs).

- The AI model must meet criteria for reliability and reproducibility. AI models developed from limited or incorrectly sampled data sets may not generalize to the full spectrum of the candidate population.

- The AI model must be adaptable to reflect changes in the practice or job and in the work environment.
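The cut score comparison mentioned in the first requirement can be sketched in Python. This is a minimal sketch. It assumes a modified Angoff study, in which each rater (an SME, or a run of the AI model) estimates, for every item, the probability that a minimally competent candidate answers correctly. The function names, the tolerance, and the ratings are hypothetical. The reproducibility helper assumes the locked model has been run several times on the same inputs.

```python
from statistics import mean, stdev


def angoff_cut_score(ratings):
    """Modified Angoff cut score.

    ratings: one row per rater; each row holds, per item, the estimated
    probability (0-1) that a minimally competent candidate answers correctly.
    The cut score is the sum over items of the mean rating for that item.
    """
    n_items = len(ratings[0])
    return sum(mean(row[i] for row in ratings) for i in range(n_items))


def compare_cut_scores(ai_ratings, sme_ratings, tolerance):
    """Compare AI-derived and SME-derived cut scores (in raw score points)."""
    ai_cut = angoff_cut_score(ai_ratings)
    sme_cut = angoff_cut_score(sme_ratings)
    difference = ai_cut - sme_cut
    return {
        "ai": ai_cut,
        "sme": sme_cut,
        "difference": difference,
        "agrees": abs(difference) <= tolerance,
    }


def reproducibility_spread(repeated_cut_scores):
    """Standard deviation of cut scores from repeated runs of the locked model."""
    return stdev(repeated_cut_scores)


if __name__ == "__main__":
    # Hypothetical ratings for a four-item exam.
    sme = [[0.60, 0.70, 0.50, 0.80],
           [0.70, 0.60, 0.50, 0.90],
           [0.65, 0.65, 0.55, 0.85]]
    ai = [[0.62, 0.68, 0.52, 0.84]]
    print(compare_cut_scores(ai, sme, tolerance=0.5))
    print(reproducibility_spread([2.60, 2.70, 2.65]))
```

With these illustrative ratings, the AI cut score (2.66 raw points) lies within 0.01 of the SME cut score (about 2.67). In a real study, the tolerance would come from the precision of the SME panel's own estimate rather than a fixed number.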

Use of AI Lockdown

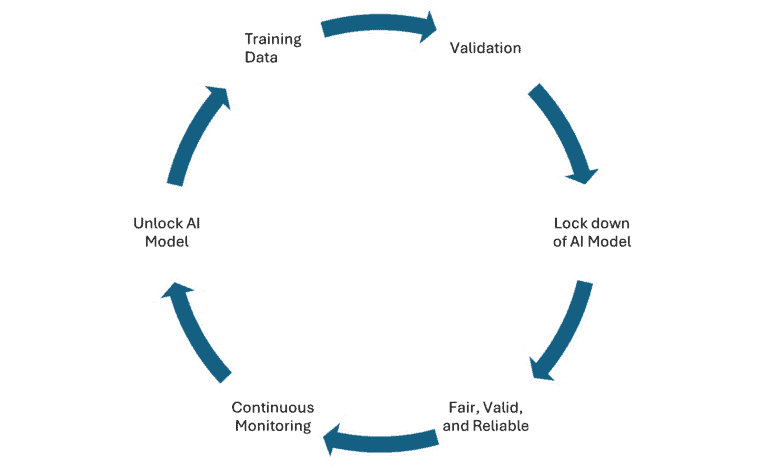

“Locked-down generative AI” sounds like an oxymoron. However, it is a necessary element of several certification processes. For example, to perform a validation study, the model needs to be locked down for a set period. This prevents the model from overfitting to, or learning from, new data that is not representative of the overall distribution. Locking down a system also helps maintain stable predictions and prevents deviation from validated performance, which is at the core of establishing reliability. When a model is locked down, its parameters remain unchanged from when the model was trained and tested, and the model is treated as a “black box” that is evaluated only on its input and output data. At predefined intervals, the locked model needs to be “unlocked” to undergo a new validation study with new data from applicants, candidates, and examinees. This is important because a closed or locked model deteriorates over time as the practice and population it was validated against change. To prevent this atrophy, the model needs to be updated through human intervention and new data. The figure above presents the lifecycle of an AI validation process.
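One way to make "parameters remain unchanged" checkable is to record a cryptographic fingerprint of the validated parameters. The model then refuses to serve predictions if the fingerprint no longer matches, and it also tracks when the lockdown period ends. The sketch below assumes the parameters can be serialized to JSON. `LockedModel`, the 365-day interval, and the linear `predict` (a stand-in for the real model's inference call) are hypothetical.

```python
import hashlib
import json
from datetime import date


def fingerprint(parameters):
    """SHA-256 digest of the parameters, serialized deterministically."""
    payload = json.dumps(parameters, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class LockedModel:
    """Hypothetical wrapper that pins a validated parameter snapshot."""

    def __init__(self, parameters, validated_on, revalidate_every_days):
        # Keeps a reference (not a copy) so any later change is detectable.
        self._parameters = parameters
        self.validated_digest = fingerprint(parameters)
        self.validated_on = validated_on
        self.revalidate_every_days = revalidate_every_days

    def is_intact(self):
        """True if the parameters still match the validated snapshot."""
        return fingerprint(self._parameters) == self.validated_digest

    def revalidation_due(self, today):
        """True once the lockdown period has elapsed and a new study is needed."""
        return (today - self.validated_on).days >= self.revalidate_every_days

    def predict(self, features):
        """Black-box use: only inputs and outputs are exposed."""
        if not self.is_intact():
            raise RuntimeError("parameters differ from the validated snapshot")
        weights = self._parameters["weights"]
        # Linear stand-in for the real model's inference call.
        return sum(w * x for w, x in zip(weights, features)) + self._parameters["bias"]


if __name__ == "__main__":
    model = LockedModel({"weights": [0.5, 2.0], "bias": 1.0},
                        validated_on=date(2024, 1, 1), revalidate_every_days=365)
    print(model.predict([2.0, 1.0]), model.revalidation_due(date(2024, 6, 1)))
```

Any change to the weights, accidental or unauthorized, alters the digest and blocks predictions until the model has been through a new validation study and been re-locked.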

A certification body for persons can implement periodic lockdown through techniques such as:

- Freezing Layers: Earlier layers of the model are frozen so that their weights do not update during training.

- Weight Clamping: Limits are set on how far the model's weights can change from the values established during the initial validation.

- Regularization Techniques: Techniques such as dropout, applied during training updates, can act as a partial lockdown by reducing overfitting to new data.
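The first two techniques can be illustrated on a toy model stored as plain lists of weights. This is a minimal sketch, not a framework API. `locked_update`, the layer names, and the `max_delta` bound are hypothetical. In a library such as PyTorch, freezing would normally be done by setting `requires_grad = False` on the frozen parameters instead.

```python
def locked_update(layers, gradients, reference, learning_rate, frozen, max_delta):
    """One gradient-descent step with two lockdown controls.

    layers, gradients, reference: dicts mapping layer name -> list of weights
    (reference holds the values established during the last validation).
    frozen: names of layers that must not change (layer freezing).
    max_delta: weights may move at most this far from their reference
    values (weight clamping).
    """
    updated = {}
    for name, weights in layers.items():
        if name in frozen:
            updated[name] = list(weights)  # frozen layer: copied unchanged
            continue
        new_weights = []
        for w, g, ref in zip(weights, gradients[name], reference[name]):
            candidate = w - learning_rate * g
            # Clamp into [ref - max_delta, ref + max_delta].
            new_weights.append(min(max(candidate, ref - max_delta), ref + max_delta))
        updated[name] = new_weights
    return updated


if __name__ == "__main__":
    validated = {"embed": [1.0, -1.0], "head": [0.5, 0.5]}
    grads = {"embed": [10.0, 10.0], "head": [1.0, -4.0]}
    print(locked_update(validated, grads, validated, learning_rate=0.1,
                        frozen={"embed"}, max_delta=0.25))
```

In this example, the `embed` layer keeps its validated values despite large gradients. The second `head` weight would have moved to 0.9, but it is held at 0.75 (its validated value plus `max_delta`).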

Risks in Use of AI in Certification of Persons

A certification body that uses generative AI must carry out a risk analysis. As an example, new regulations in the European Union classify AI systems by risk and impose stricter requirements on high-risk applications. By contrast, U.S. FDA guidance for medical devices focuses on enabling continuous learning while maintaining safety and effectiveness. In some sense, the EU and FDA requirements are at odds with each other (this will be covered in another blog post).
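The regulations mentioned above prescribe their own risk categories, and this sketch does not attempt to reproduce them. As a generic starting point for the risk analysis itself, a certification body could score each identified risk by likelihood and severity, then review the highest scores first. The 1-5 scales, the threshold of 15, and the example risks are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    severity: int    # 1 (negligible) to 5 (critical)

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def prioritize(risks, high_threshold=15):
    """Rank risks by score, highest first, flagging those at or above the threshold."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.description, r.score, r.score >= high_threshold) for r in ranked]


if __name__ == "__main__":
    register = [
        Risk("Model drifts after its last validation study", 3, 5),
        Risk("Training data under-represents a candidate group", 2, 4),
        Risk("Exam content leaks through model outputs", 4, 5),
    ]
    for row in prioritize(register):
        print(row)
```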

In fields such as certification of persons, it is important to provide evidence that the use of generative AI ensures reliability, transparency, and trustworthiness. The model must perform consistently over time, in line with its established validity. This is essential to ensure that all certified people are examined against the same scheme requirements. For instance, if people examined today take a harder exam than people examined in the past, the result would be a pool of certified individuals with different levels of competence.
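A simple screen for the "harder exam" scenario is to compare the average item difficulty of the current administration with that of a reference administration. Item difficulty here is the classical p-value, i.e. the proportion of examinees answering an item correctly. This is only a minimal sketch with hypothetical names, data, and tolerance. A difference in p-values can also reflect a difference in candidate ability, so a flagged result calls for a proper equating study rather than proving the exam changed.

```python
from statistics import mean


def item_p_values(responses):
    """Proportion correct per item; responses holds one 0/1 row per examinee."""
    n = len(responses)
    return [sum(row[i] for row in responses) / n for i in range(len(responses[0]))]


def difficulty_shift(reference_responses, current_responses, tolerance):
    """Change in mean p-value; a negative shift means the current form looks harder."""
    reference = mean(item_p_values(reference_responses))
    current = mean(item_p_values(current_responses))
    shift = current - reference
    return shift, abs(shift) > tolerance


if __name__ == "__main__":
    # Hypothetical scored responses: 4 examinees x 3 items per administration.
    past = [[1, 1, 0], [1, 0, 1], [1, 1, 1], [0, 1, 1]]
    today = [[1, 0, 0], [0, 0, 1], [1, 1, 0], [0, 1, 0]]
    print(difficulty_shift(past, today, tolerance=0.05))
```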

Balancing Validity, Reliability, and Flexibility

To leverage the full benefits of generative AI, the model needs to continuously learn from new data. However, to ensure consistency and reliability, the model needs to maintain some core characteristics. Too many restrictions would prevent a model from adapting to changes in the data landscape, while too few restrictions can lead to volatile and inconsistent performance.
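One way to strike this balance is to allow retraining but release the retrained version only if its validation metrics stay close to those of the locked baseline. Otherwise, the locked model stays in service and the change goes to a full validation study. This minimal sketch assumes metrics where higher is better. The metric names and both tolerances are hypothetical.

```python
def accept_update(baseline, candidate, max_drop, max_change):
    """Decide whether a retrained model may replace the locked baseline.

    baseline, candidate: dicts of metric name -> value (higher is better).
    max_drop: largest tolerated decrease on any metric.
    max_change: largest tolerated absolute change in either direction;
    larger shifts, even improvements, are sent to full revalidation.
    Returns (accepted, reasons).
    """
    reasons = []
    for name, base in baseline.items():
        delta = candidate[name] - base
        if delta < -max_drop:
            reasons.append(f"{name} dropped by {-delta:.3f}")
        elif abs(delta) > max_change:
            reasons.append(f"{name} changed by {delta:+.3f}")
    return not reasons, reasons


if __name__ == "__main__":
    locked = {"sme_agreement": 0.90, "calibration": 0.85}
    print(accept_update(locked, {"sme_agreement": 0.91, "calibration": 0.84}, 0.02, 0.05))
    print(accept_update(locked, {"sme_agreement": 0.80, "calibration": 0.86}, 0.02, 0.05))
```

Treating large improvements as a trigger for revalidation is a deliberate choice. A sudden jump in performance can also signal a change in what the model measures, which matters for consistency across cohorts.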

A certification body must maintain documented evidence for each deployed AI model, including documents on model design, training data, and model performance. It must show that the training data is not inherently biased toward certain groups of users. Human oversight is critical, and certification decisions cannot be made by AI.
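Part of the evidence that training data is not biased toward certain groups can come from comparing each group's share of the training data with its share of the candidate population. The sketch below is one simple check among many. The group labels, population shares, and the 0.8 ratio threshold are hypothetical. A representative sample does not by itself rule out bias in labels or outcomes.

```python
from collections import Counter


def representation_gaps(training_groups, population_shares, min_ratio=0.8):
    """Compare each group's share of the training data with its population share.

    training_groups: one group label per training record.
    population_shares: group -> share of the candidate population (0-1).
    Returns group -> (training share, ratio to population share, flagged).
    """
    counts = Counter(training_groups)
    n = len(training_groups)
    report = {}
    for group, population_share in population_shares.items():
        training_share = counts[group] / n
        ratio = training_share / population_share
        report[group] = (training_share, ratio, ratio < min_ratio)
    return report


if __name__ == "__main__":
    labels = ["A"] * 60 + ["B"] * 30 + ["C"] * 10
    print(representation_gaps(labels, {"A": 0.60, "B": 0.25, "C": 0.15}))
```

Here group C makes up 10% of the training records but 15% of the hypothetical population, a ratio of about 0.67, so it would be flagged for further sampling or review.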

Finally, a certification body must ensure that it has personnel with the required competence in AI. It must also perform regular internal and external audits to ensure ongoing compliance with the ISO/IEC 17024 standard.
